VMware vSphere 7.x Study Guide for VMware Certified Professional – Data Center Virtualization certification. This article covers Section 1: Architectures and Technologies. Objective 1.3.2 – Explain the importance of advanced storage configuration (VASA, VAAI, etc.)

This article is part of the VMware vSphere 7.x - VCP-DCV Study Guide. Check out this page first for an introduction, disclaimer, and updates on the guide. The page also includes a collection of articles matching each objective of the official VCP-DCV.

Explain the importance of advanced storage configuration

In this objective, you are required to explain the importance of advanced storage configuration. This mainly covers Storage Providers (a.k.a. VASA) and Hardware Acceleration (a.k.a. VAAI) for both block and file storage. Pluggable storage architecture and path management are also critical to study.

1. vSphere APIs for Storage Awareness (VASA)

A storage provider is a software component that is either offered by VMware or developed by a third party through vSphere APIs for Storage Awareness (VASA). A storage provider is also called a VASA provider. Storage providers integrate with various storage entities, including external physical storage and storage abstractions such as vSAN and vVols. Storage providers can also support software solutions, for example I/O filters.

Note: VASA becomes essential when you work with vVols, vSAN, vSphere APIs for I/O Filtering (VAIO), and VM storage policies.

1.1 VASA Highlights and Benefits

- VASA is either supplied by third-party vendors or offered by VMware.

- It enables communications between the vCenter Server and underlying storage.

- Through VASA, storage entities can inform vCenter Server about their configurations, capabilities, and storage health and events.

- VASA delivers VM storage requirements from the vCenter Server to a storage entity and ensures that the storage layer meets the requirements.

- Generally, vCenter Server and ESXi use the storage providers to obtain information about storage configuration, status, and storage data services offered in your environment. This information appears in the vSphere Client.

- The information helps you to make appropriate decisions about virtual machine placement, to set storage requirements, and to monitor your storage environment.

- Information that the storage provider supplies falls into the following categories: storage data services and capabilities, storage status, and Storage DRS information for distributed resource scheduling on block devices or file systems.
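To show how advertised capabilities feed placement decisions, here is a minimal sketch of matching a VM storage policy against the capabilities that storage providers report. The capability names (`replication`, `max_iops`), the datastore names, and the "numbers are minimums" rule are hypothetical and exist only for illustration. This is not the vCenter Server algorithm or any VMware API.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    # Capabilities that a (hypothetical) storage provider reported via VASA.
    capabilities: dict = field(default_factory=dict)


def is_compliant(datastore: Datastore, policy: dict) -> bool:
    """Return True if the datastore satisfies every rule in the policy."""
    for key, required in policy.items():
        offered = datastore.capabilities.get(key)
        if offered is None:
            return False  # capability not advertised at all
        if isinstance(required, bool) or not isinstance(required, (int, float)):
            if offered != required:  # booleans and strings must match exactly
                return False
        elif offered < required:  # numbers are treated as minimums
            return False
    return True


def compatible_datastores(datastores, policy):
    """List the datastores a VM with this policy could be placed on."""
    return [ds.name for ds in datastores if is_compliant(ds, policy)]


datastores = [
    Datastore("gold-ds", {"replication": True, "max_iops": 20000}),
    Datastore("bronze-ds", {"replication": False, "max_iops": 5000}),
]
print(compatible_datastores(datastores, {"replication": True, "max_iops": 10000}))
# prints ['gold-ds']
```

In the vSphere Client, the equivalent result appears as the list of compatible datastores when you select a VM storage policy.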

1.2 Storage Provider Types

Persistence Storage Providers

Storage providers that manage arrays and storage abstractions are called persistence storage providers. Providers that support vVols or vSAN belong to this category. In addition to storage, persistence providers can provide other data services, such as replication.

Data Service Providers

Another category of providers is I/O filter storage providers or data service providers. These providers offer data services that include host-based caching, compression, and encryption.

Both persistence storage providers and data service providers can be either built-in or third-party providers.

Built-in Storage Providers

Built-in storage providers are offered by VMware. Typically, they do not require registration. For example, the storage providers that support vSAN or I/O filters are built-in and are registered automatically.

Third-Party Storage Providers

When a third party offers a storage provider, you typically must register the provider. An example of such a provider is the vVols provider. You use the vSphere Client to register and manage each storage provider component.

The graphic above illustrates how different types of storage providers facilitate communication between vCenter Server, ESXi, and other components of your storage environment. For example, these components might include storage arrays, vVols storage, and I/O filters.

1.3 Storage Provider Requirements and Considerations

To use storage providers, follow these requirements:

- Make sure that every storage provider you use is certified by VMware and properly deployed. For information about deploying the storage providers, contact your storage vendor.

- Make sure that the storage provider is compatible with the vCenter Server and ESXi versions. See VMware Compatibility Guide.

- Do not install the VASA provider on the same system as the vCenter Server.

- If your environment contains older versions of storage providers, existing functionality continues to work. However, to use new features, upgrade your storage provider to a new version.

- When you upgrade a storage provider to a later VASA version, you must unregister and reregister the provider. After registration, the vCenter Server can detect and use the functionality of the new VASA version.
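The reregistration requirement makes more sense if you picture vCenter Server recording a provider's VASA version at registration time. The sketch below is a hypothetical model of that idea, not how vCenter Server is implemented. The provider name and the version strings are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class StorageProvider:
    name: str
    vasa_version: str  # VASA version the provider software implements now


class ProviderRegistry:
    """Hypothetical model: the version is captured when the provider is
    registered, not when the vendor upgrades the provider software."""

    def __init__(self):
        self._registered = {}  # provider name -> version seen at registration

    def register(self, provider: StorageProvider):
        self._registered[provider.name] = provider.vasa_version

    def unregister(self, provider: StorageProvider):
        self._registered.pop(provider.name, None)

    def detected_version(self, provider: StorageProvider):
        return self._registered.get(provider.name)


registry = ProviderRegistry()
vp = StorageProvider("array-vp", "3.0")
registry.register(vp)
vp.vasa_version = "3.5"                # vendor upgrades the provider
print(registry.detected_version(vp))   # still 3.0
registry.unregister(vp)
registry.register(vp)                  # unregister, then reregister
print(registry.detected_version(vp))   # now 3.5
```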

2. vSphere APIs for Array Integration (VAAI)

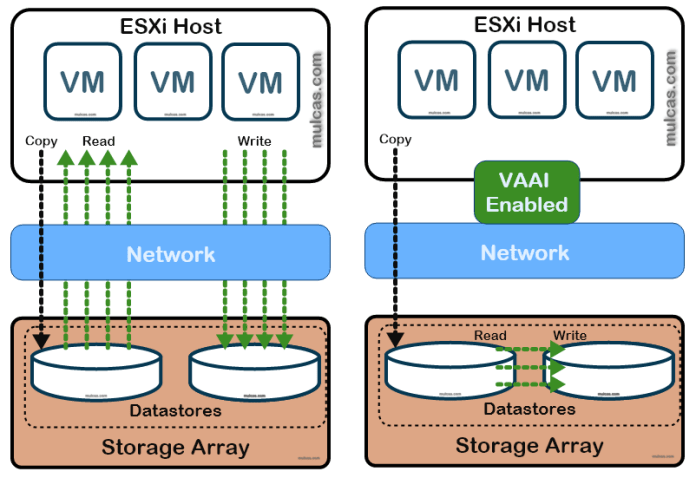

The hardware acceleration (or VAAI) functionality enables the ESXi host to integrate with compliant storage systems. The host can offload certain virtual machine and storage management operations to the storage systems. With storage hardware assistance, your host performs these operations faster and consumes less CPU, memory, and storage fabric bandwidth.

Note: Block storage devices (Fibre Channel and iSCSI) and NAS devices support hardware acceleration.

2.1 VAAI Benefits

When the hardware acceleration functionality is supported, the host can get hardware assistance and perform several tasks faster and more efficiently.

The host can get assistance with the following activities:

- Migrating virtual machines with Storage vMotion

- Deploying virtual machines from templates

- Cloning virtual machines or templates

- VMFS clustered locking and metadata operations for virtual machine files

- Provisioning thick virtual disks

- Creating fault-tolerant virtual machines

- Creating and cloning thick disks on NFS datastores

2.2 VAAI for Block Storage Devices

ESXi hardware acceleration for block storage devices supports the following array operations:

Full Copy

Full copy, also called clone blocks or copy offload, enables the storage arrays to make full copies of data within the array without having the host read and write the data. This operation reduces the time and network load when cloning virtual machines, provisioning from a template, or migrating with Storage vMotion.
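A little arithmetic shows why full copy saves fabric bandwidth. Without offload, every byte of the disk travels from the array to the host and back again. With offload, the host only issues copy commands. The 40 GiB disk and the 4 MiB per-command transfer size below are illustrative assumptions, not guaranteed defaults for any array.

```python
GIB = 2**30
MIB = 2**20


def host_copy_fabric_bytes(disk_bytes: int) -> int:
    """Without full copy, the host reads each block and writes it back,
    so every byte crosses the storage fabric twice."""
    return 2 * disk_bytes


def full_copy_commands(disk_bytes: int, transfer_bytes: int) -> int:
    """With full copy, the host only sends copy commands and the data stays
    inside the array: one command per transfer-sized chunk (ceiling division)."""
    return -(-disk_bytes // transfer_bytes)


disk = 40 * GIB
print(host_copy_fabric_bytes(disk) // GIB)   # 80 (GiB moved through the host)
print(full_copy_commands(disk, 4 * MIB))     # 10240 commands, no bulk data moved
```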

Block Zeroing

Block zeroing, also called write same, enables storage arrays to zero out a large number of blocks to provide newly allocated storage that is free of previously written data. This operation reduces the time and network load when creating virtual machines and formatting virtual disks.

Hardware Assisted Locking

Hardware assisted locking, also called atomic test and set (ATS), supports discrete virtual machine locking without the use of SCSI reservations. This operation allows disk locking per sector, instead of locking the entire LUN as SCSI reservations do.
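The per-sector nature of ATS can be modeled as a compare-and-swap on independent lock records. In the minimal sketch below, a Python `threading.Lock` stands in for the array's atomicity guarantee. The `Lun` and `LockRecord` classes and the host names are hypothetical and do not represent the actual on-disk VMFS lock format.

```python
import threading


class LockRecord:
    """Hypothetical on-disk lock record updated with test-and-set semantics."""

    def __init__(self):
        self.owner = None
        self._guard = threading.Lock()  # stands in for the array's atomicity

    def atomic_test_and_set(self, expected, new):
        # Compare the current owner with `expected` and write `new` only if
        # they match, as one indivisible step.
        with self._guard:
            if self.owner == expected:
                self.owner = new
                return True
            return False


class Lun:
    def __init__(self, sectors):
        # One independent lock record per sector, so there is no LUN-wide lock.
        self.locks = {s: LockRecord() for s in range(sectors)}

    def acquire(self, sector, host):
        return self.locks[sector].atomic_test_and_set(None, host)

    def release(self, sector, host):
        return self.locks[sector].atomic_test_and_set(host, None)


lun = Lun(sectors=4)
print(lun.acquire(0, "esxi-01"))  # True
print(lun.acquire(0, "esxi-02"))  # False: sector 0 is already locked
print(lun.acquire(1, "esxi-02"))  # True: other sectors stay available
```

With a SCSI reservation, the second host would instead be blocked from the whole LUN until the first host released it.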

Storage Space Reclamation (UNMAP)

ESXi supports the space reclamation command, also called the SCSI UNMAP command, which originates from a VMFS datastore or a VM guest operating system.

- The command helps thin-provisioned storage arrays to reclaim unused space from the VMFS datastore and thin virtual disks on the datastore.

- The VMFS6 datastore can send the space reclamation command automatically. With the VMFS5 datastore, you can manually reclaim storage space.

- The command can also originate directly from the guest operating system.

- Both VMFS5 and VMFS6 datastores can provide support to the unmap command that proceeds from the guest operating system. However, the level of support is limited on VMFS5.
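The difference between automatic and manual reclamation can be sketched as a thin LUN that keeps blocks backed until it receives UNMAP. On VMFS5, the manual method is typically run with `esxcli storage vmfs unmap`. The classes below are a hypothetical, simplified model. In particular, real VMFS6 reclamation runs asynchronously in the background rather than immediately.

```python
class ThinLun:
    """Hypothetical thin-provisioned LUN that tracks physically backed blocks."""

    def __init__(self):
        self.allocated = set()

    def write(self, blocks):
        # Writing a block makes the array back it with physical capacity.
        self.allocated.update(blocks)

    def unmap(self, blocks):
        # UNMAP tells the array these blocks are no longer in use.
        self.allocated.difference_update(blocks)


class VmfsDatastore:
    def __init__(self, lun, auto_unmap):
        self.lun = lun
        self.auto_unmap = auto_unmap  # True models VMFS6, False models VMFS5
        self.pending = set()          # freed by VMFS but still backed on the array

    def delete_file(self, blocks):
        if self.auto_unmap:
            self.lun.unmap(blocks)    # simplified: VMFS6 really does this asynchronously
        else:
            self.pending.update(blocks)

    def manual_reclaim(self):
        # Models the manual VMFS5 reclamation step.
        self.lun.unmap(self.pending)
        self.pending.clear()
```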

In addition to hardware acceleration support, ESXi includes support for array thin provisioning.

Array Thin Provisioning

ESXi supports thin provisioning for virtual disks. With the disk-level thin provisioning feature, you can create virtual disks in a thin format. For a thin virtual disk, ESXi provisions the entire space required for the disk’s current and future activities, but the disk consumes only as much storage space as it needs for its initial operations.

The ESXi host integrates with block-based storage and performs these tasks:

- The host can recognize underlying thin-provisioned LUNs and monitor their space use to avoid running out of physical space.

- The LUN space might change if, for example, your VMFS datastore expands or if you use Storage vMotion to migrate virtual machines to the thin-provisioned LUN.

- The host warns you about breaches in physical LUN space and about out-of-space conditions.

- The host can run the automatic T10 unmap command from VMFS6 and VM guest operating systems to reclaim unused space from the array.

- VMFS5 supports a manual space reclamation method.
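Space monitoring matters because thin disks can promise more capacity than the LUN physically has. The sketch below computes an overcommit ratio and classifies physical usage. The 75% `warn_ratio` is a hypothetical value chosen for illustration. In practice, the warnings are driven by thresholds that the array reports to the host.

```python
def overcommit_ratio(provisioned_gb, physical_capacity_gb):
    """Logical space promised to thin disks divided by physical LUN capacity."""
    return sum(provisioned_gb) / physical_capacity_gb


def lun_space_state(used_gb, physical_capacity_gb, warn_ratio=0.75):
    """Classify the physical usage of a thin LUN (warn_ratio is hypothetical)."""
    if used_gb >= physical_capacity_gb:
        return "out of space"
    if used_gb >= warn_ratio * physical_capacity_gb:
        return "warning"
    return "ok"


print(overcommit_ratio([400, 400, 400], 1000))  # 1.2
print(lun_space_state(800, 1000))               # warning
```

A ratio above 1.0 is normal for thin provisioning. It simply means that physical usage has to be watched before the disks grow into the promised space.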

2.3 VAAI on NAS Devices

The VAAI NAS framework supports both versions of NFS storage, NFS 3 and NFS 4.1. The VAAI NAS uses a set of storage primitives to offload storage operations from the host to the array. The following list shows the supported NAS operations:

Full File Clone

Supports the ability of NAS devices to clone virtual disk files. This operation is similar to the VMFS block cloning, except that NAS devices clone entire files instead of file segments. Tasks that benefit from the full file clone operation include VM cloning, Storage vMotion, and deployment of VMs from templates.

Fast File Clone

This operation, also called array-based or native snapshots, offloads the creation of virtual machine snapshots and linked clones to the array.

Reserve Space

Supports the ability of storage arrays to allocate space for a virtual disk file in the thick format.

Typically, when you create a virtual disk on an NFS datastore, the NAS server determines the allocation policy.

- The default allocation policy on most NAS servers is thin and does not guarantee backing storage to the file.

- The reserve space operation can instruct the NAS device to use vendor-specific mechanisms to reserve space for a virtual disk.

- You can create thick virtual disks on the NFS datastore if the backing NAS server supports the reserve space operation.
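The rule in the list above can be expressed as a small decision function. The format names follow the VMDK types used elsewhere in this article. The function itself is a hypothetical sketch, not a vSphere API.

```python
def allocation_for_nfs_disk(requested_format, nas_supports_reserve_space):
    """Hypothetical check of which VMDK format can be created on an NFS datastore."""
    if requested_format == "thin":
        # Thin works everywhere; the NAS server's own (usually thin) policy applies.
        return "thin"
    if requested_format in ("lazyzeroedthick", "eagerzeroedthick"):
        if nas_supports_reserve_space:
            # The host asks the NAS device to reserve the full size up front.
            return requested_format
        raise ValueError("thick formats on NFS require the Reserve Space operation")
    raise ValueError(f"unknown format: {requested_format}")


print(allocation_for_nfs_disk("eagerzeroedthick", nas_supports_reserve_space=True))
```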

Extended Statistics

Provides visibility into space use on NAS devices. The operation enables you to query space utilization details for virtual disks on NFS datastores. The details include the size of a virtual disk and the space it actually consumes. This functionality is useful for thin provisioning.
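With the size and consumption figures that extended statistics expose, you can see how much provisioned space thin disks have not yet used. The `DiskStats` record and the sample values below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DiskStats:
    name: str
    provisioned_gb: float  # logical size of the virtual disk
    consumed_gb: float     # space actually used on the NAS device


def unconsumed_space(disks):
    """Return per-disk and total space not yet consumed by thin disks."""
    per_disk = {d.name: d.provisioned_gb - d.consumed_gb for d in disks}
    return per_disk, sum(per_disk.values())


disks = [DiskStats("vm1.vmdk", 100, 30), DiskStats("vm2.vmdk", 50, 50)]
print(unconsumed_space(disks))  # ({'vm1.vmdk': 70, 'vm2.vmdk': 0}, 70)
```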

2.4 VAAI Considerations

The VMFS data mover does not leverage hardware offloads and instead uses software data movement when one of the following occurs:

- The source and destination VMFS datastores have different block sizes.

- The source file type is RDM and the destination file type is non-RDM (regular file).

- The source VMDK type is eagerzeroedthick and the destination VMDK type is thin.

- The source or destination VMDK is in sparse or hosted format.

- The source virtual machine has a snapshot.

- The logical address and transfer length in the requested operation are not aligned to the minimum alignment required by the storage device. All datastores created with the vSphere Client are aligned automatically.

- The VMFS has multiple LUNs or extents, and they are on different arrays.
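The list above can be read as a checklist that the data mover evaluates before offloading a copy. The sketch below encodes only those listed conditions and assumes that the array otherwise supports VAAI. The `CopyRequest` fields are hypothetical names, and the real ESXi logic may consider more factors.

```python
from dataclasses import dataclass


@dataclass
class CopyRequest:
    src_block_size_mb: int
    dst_block_size_mb: int
    src_is_rdm: bool
    dst_is_rdm: bool
    src_vmdk_type: str      # e.g. "thin", "lazyzeroedthick", "eagerzeroedthick"
    dst_vmdk_type: str
    sparse_or_hosted: bool  # source or destination VMDK in sparse/hosted format
    src_has_snapshot: bool
    aligned: bool           # address and length meet the device's alignment
    extents_on_different_arrays: bool


def uses_hardware_offload(req: CopyRequest) -> bool:
    """Return False when any listed condition forces a software copy."""
    fallbacks = [
        req.src_block_size_mb != req.dst_block_size_mb,
        req.src_is_rdm and not req.dst_is_rdm,
        req.src_vmdk_type == "eagerzeroedthick" and req.dst_vmdk_type == "thin",
        req.sparse_or_hosted,
        req.src_has_snapshot,
        not req.aligned,
        req.extents_on_different_arrays,
    ]
    return not any(fallbacks)
```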

3. Pluggable Storage Architecture and Path Management

These are the key concepts behind ESXi storage multipathing.

3.1 Pluggable Storage Architecture (PSA)

PSA is an open and modular framework that coordinates various software modules responsible for multipathing operations.

PSA allows storage partners to create and deliver multipathing and load-balancing plug-ins that are optimized for each array. Plug-ins communicate with storage arrays and determine the best path selection strategy to increase I/O performance and reliability from the ESXi host to the storage array.

When coordinating the VMware native modules and any installed third-party multipathing plug-ins (MPPs), the PSA performs the following tasks:

- Loads and unloads multipathing plug-ins.

- Hides virtual machine specifics from a particular plug-in.

- Routes I/O requests for a specific logical device to the MPP managing that device.

- Handles I/O queueing to the logical devices.

- Implements logical device bandwidth sharing between virtual machines.

- Handles I/O queueing to the physical storage HBAs.

- Handles physical path discovery and removal.

- Provides logical device and physical path I/O statistics.

As the Pluggable Storage Architecture illustration above shows, multiple third-party MPPs can run in parallel with the VMware NMP or the High-Performance Plug-in (HPP). When installed, the third-party MPPs can replace the behavior of the native modules. The MPPs can take control of the path failover and the load-balancing operations for the specified storage devices.
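The routing role of the PSA can be sketched as a table that maps each device to the plug-in that claimed it, with a native plug-in as the fallback. On a real host, ownership is decided by claim rules, which you can inspect with `esxcli storage core claimrule list`. The classes, plug-in names, and device IDs below are hypothetical.

```python
class MultipathingPlugin:
    def __init__(self, name):
        self.name = name

    def issue(self, device, io):
        return f"{self.name} handled {io} for {device}"


class PluggableStorageArchitecture:
    """Hypothetical sketch: route I/O to the plug-in that claimed the device."""

    def __init__(self, default_plugin):
        self.default_plugin = default_plugin  # stands in for the native NMP
        self.claims = {}                      # device -> claiming plug-in

    def claim(self, device, plugin):
        self.claims[device] = plugin

    def route(self, device, io):
        plugin = self.claims.get(device, self.default_plugin)
        return plugin.issue(device, io)


psa = PluggableStorageArchitecture(MultipathingPlugin("NMP"))
psa.claim("naa.600a", MultipathingPlugin("VendorMPP"))
print(psa.route("naa.600a", "read"))   # VendorMPP handled read for naa.600a
print(psa.route("naa.600b", "write"))  # NMP handled write for naa.600b
```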

3.2 VMware Native Multipathing Plug-In (NMP)

By default, ESXi provides an extensible multipathing module called Native Multipathing Plug-In (NMP).

Generally, the VMware NMP supports all storage arrays listed on the VMware storage HCL and provides a default path selection algorithm based on the array type. The NMP associates a set of physical paths with a specific storage device, or LUN.

Typically, the NMP performs the following operations:

- Manages physical path claiming and unclaiming.

- Registers and de-registers logical devices.

- Associates physical paths with logical devices.

- Supports path failure detection and remediation.

- Processes I/O requests to logical devices:
  - Selects an optimal physical path for the request.
  - Performs actions necessary to handle path failures and I/O command retries.

- Supports management tasks, such as reset of logical devices.

For additional multipathing operations, the NMP uses submodules, called SATPs and PSPs. The NMP delegates to the SATP the specific details of handling path failover for the device. The PSP handles path selection for the device.

3.3 Path Selection Plug-Ins and Policies (PSPs)

VMware Path Selection Plug-ins (PSPs) are submodules of the VMware NMP and are responsible for selecting a physical path for I/O requests.

The NMP assigns a default PSP for each logical device based on the device type. You can override the default PSP. For more information, see Change the Path Selection Policy.

Each PSP enables and enforces a corresponding path selection policy.

VMW_PSP_MRU - Most Recently Used

The Most Recently Used (VMware) policy is enforced by VMW_PSP_MRU.

- It selects the first working path discovered at system boot time.

- When the path becomes unavailable, the host selects an alternative path.

- The host does not revert to the original path when that path becomes available.

- The Most Recently Used policy does not use the preferred path setting.

- This policy is the default for most active-passive storage devices.
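The MRU behavior described above (fail over, but never fail back) can be modeled with a small state machine. This is a hypothetical sketch, not the VMW_PSP_MRU implementation. The path names follow the ESXi runtime-name style but are only examples.

```python
class MostRecentlyUsed:
    """Sketch of MRU: switch away from a failed path, never revert."""

    def __init__(self, paths):
        self.paths = list(paths)                    # in discovery order
        self.state = {p: True for p in self.paths}  # True = path is working
        self.current = self._first_working()

    def _first_working(self):
        return next((p for p in self.paths if self.state[p]), None)

    def path_down(self, path):
        self.state[path] = False
        if path == self.current:
            self.current = self._first_working()

    def path_up(self, path):
        self.state[path] = True
        if self.current is None:  # only adopt it if nothing else is working
            self.current = path


mru = MostRecentlyUsed(["vmhba1:C0:T0:L1", "vmhba2:C0:T0:L1"])
mru.path_down("vmhba1:C0:T0:L1")
mru.path_up("vmhba1:C0:T0:L1")
print(mru.current)  # vmhba2:C0:T0:L1 (no failback)
```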

VMW_PSP_FIXED - Fixed

The Fixed (VMware) policy is implemented by VMW_PSP_FIXED.

- The policy uses the designated preferred path.

- If the preferred path is not assigned, the policy selects the first working path discovered at system boot time.

- If the preferred path becomes unavailable, the host selects an alternative available path. The host returns to the previously defined preferred path when it becomes available again.

- Fixed is the default policy for most active-active storage devices.
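For contrast with MRU, here is a minimal sketch of Fixed, which returns to the preferred path once that path recovers. As a simplification, the sketch treats the first discovered path as preferred when none is assigned. The class and the path names are hypothetical.

```python
class Fixed:
    """Sketch of Fixed: use the preferred path and fail back to it."""

    def __init__(self, paths, preferred=None):
        self.paths = list(paths)
        self.state = {p: True for p in self.paths}
        # Simplification: with no preferred path, use the first discovered path.
        self.preferred = preferred if preferred is not None else self.paths[0]
        self.current = self.preferred

    def _select(self):
        if self.state[self.preferred]:
            return self.preferred
        return next((p for p in self.paths if self.state[p]), None)

    def path_down(self, path):
        self.state[path] = False
        self.current = self._select()

    def path_up(self, path):
        self.state[path] = True
        self.current = self._select()  # fail back if the preferred path returned


fixed = Fixed(["vmhba1:C0:T0:L1", "vmhba2:C0:T0:L1"], preferred="vmhba2:C0:T0:L1")
fixed.path_down("vmhba2:C0:T0:L1")
fixed.path_up("vmhba2:C0:T0:L1")
print(fixed.current)  # vmhba2:C0:T0:L1 (failback to preferred)
```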

VMW_PSP_RR - Round Robin

VMW_PSP_RR enables the Round Robin (VMware) policy.

- Round Robin is the default policy for many arrays.

- It uses an automatic path selection algorithm rotating through the configured paths.

- Both active-active and active-passive arrays use the policy to implement load balancing across paths for different LUNs.

- With active-passive arrays, the policy uses active paths.

- With active-active arrays, the policy uses available paths.

- The latency mechanism that is activated for the policy by default makes it more adaptive.

- To achieve better load balancing results, the mechanism dynamically selects an optimal path by considering the following path characteristics:
  - I/O bandwidth
  - Path latency
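The two Round Robin ideas above can be sketched separately: classic rotation, which switches paths after a configurable number of I/Os, and a latency-aware choice. The `iops_limit` value and the cost formula (latency weighted by outstanding I/Os) are illustrative assumptions, not VMware's actual latency algorithm. For active-passive arrays, the caller would pass only the active paths.

```python
from itertools import cycle


class RoundRobin:
    """Classic rotation: move to the next path after `iops_limit` I/Os."""

    def __init__(self, paths, iops_limit):
        self._paths = cycle(paths)
        self.iops_limit = iops_limit
        self.current = next(self._paths)
        self._count = 0

    def next_path(self):
        if self._count == self.iops_limit:
            self.current = next(self._paths)
            self._count = 0
        self._count += 1
        return self.current


def select_path_by_latency(stats):
    """Pick the path with the lowest illustrative cost.
    stats maps path -> (average latency in ms, outstanding I/Os)."""
    def cost(path):
        latency_ms, outstanding = stats[path]
        return latency_ms * (outstanding + 1)
    return min(stats, key=cost)


rr = RoundRobin(["pathA", "pathB"], iops_limit=2)
print([rr.next_path() for _ in range(5)])  # ['pathA', 'pathA', 'pathB', 'pathB', 'pathA']
print(select_path_by_latency({"pathA": (2.0, 4), "pathB": (5.0, 0)}))  # pathB
```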

3.4 VMware Storage Array Type Plug-ins (SATPs)

Storage Array Type Plug-ins (SATPs) are responsible for array-specific operations. The SATPs are also submodules of the VMware NMP.

ESXi offers a SATP for every type of array that VMware supports. ESXi also provides default SATPs that support non-specific active-active, active-passive, ALUA, and local devices.

ESXi includes several generic SATP modules for storage arrays.

VMW_SATP_LOCAL

SATP for local direct-attached devices.

VMW_SATP_LOCAL supports the VMW_PSP_MRU and VMW_PSP_FIXED path selection plug-ins, but does not support VMW_PSP_RR.

VMW_SATP_DEFAULT_AA

Generic SATP for active-active arrays.

VMW_SATP_DEFAULT_AP

Generic SATP for active-passive arrays.

VMW_SATP_ALUA

SATP for ALUA-compliant arrays.
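The relationship between SATPs and PSPs can be sketched as a default mapping plus a compatibility check. Only the active-active and active-passive defaults below follow the PSP descriptions in this article. The LOCAL restriction comes from the VMW_SATP_LOCAL note. Everything else is hypothetical, so check actual defaults on a host with `esxcli storage nmp satp list`.

```python
# Defaults inferred from the PSP descriptions above (hypothetical table).
DEFAULT_PSP = {
    "VMW_SATP_DEFAULT_AA": "VMW_PSP_FIXED",
    "VMW_SATP_DEFAULT_AP": "VMW_PSP_MRU",
}
UNSUPPORTED_PSP = {"VMW_SATP_LOCAL": {"VMW_PSP_RR"}}


def choose_psp(satp, override=None):
    """Return the PSP for a device, rejecting combinations the SATP does not support."""
    psp = override or DEFAULT_PSP.get(satp)
    if psp is None:
        raise LookupError(f"no default PSP recorded for {satp} in this sketch")
    if psp in UNSUPPORTED_PSP.get(satp, set()):
        raise ValueError(f"{satp} does not support {psp}")
    return psp


print(choose_psp("VMW_SATP_DEFAULT_AA"))                # VMW_PSP_FIXED
print(choose_psp("VMW_SATP_DEFAULT_AP", "VMW_PSP_RR"))  # VMW_PSP_RR (override)
```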


Conclusion

The topic reviewed in this article is part of the VMware vSphere 7.x Exam (2V0-21.20), which leads to the VMware Certified Professional – Data Center Virtualization 2021 certification.

Section 1 - Architectures and Technologies.

Objective 1.3.2 – Explain the importance of advanced storage configuration (VASA, VAAI, etc.)

See the full exam preparation guide and all exam sections from VMware.